How to Fix Robots.txt File Accessibility Issues in SureRank

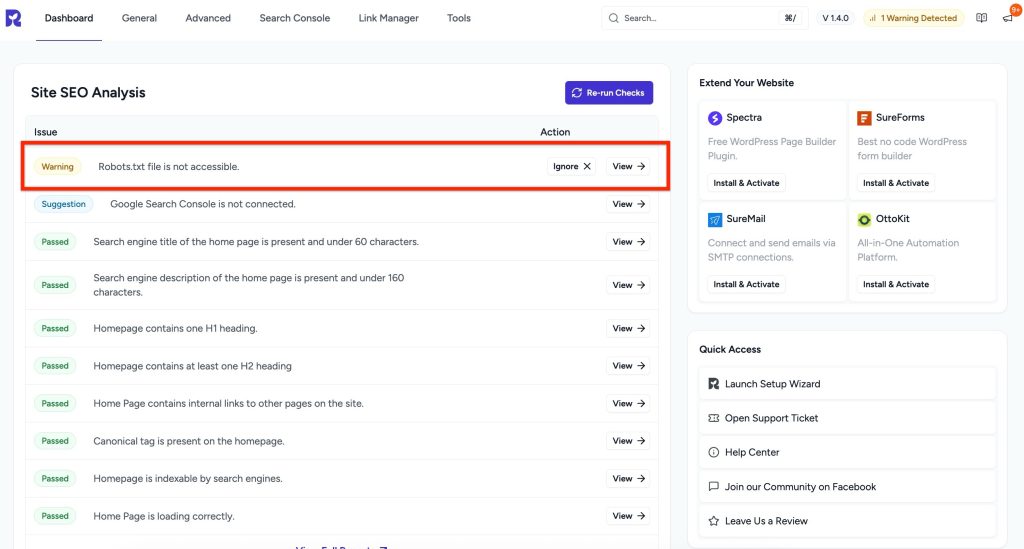

If you’ve received a critical error in SureRank stating “Robots.txt file is not accessible”, this guide explains what it means, why it matters for SEO, and how to fix it, even if you’re not a technical expert.

What’s the Problem

The robots.txt file is a simple text file in your website’s root directory that tells search engines which pages or sections of your site to crawl or avoid.
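Crawlers request this file only from the root of each host (scheme, domain, and port), so a robots.txt stored in a subfolder is not used. As a small illustration, the Python sketch below uses the standard `urllib.parse.urljoin` to show where crawlers look for the file for any page on your site; `robots_url_for` is a hypothetical helper name, and the URLs use this guide’s placeholder domain.

```python
from urllib.parse import urljoin


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL crawlers check for the host serving page_url.

    Joining with the absolute path "/robots.txt" keeps the scheme, host and
    port, but discards the page's own path, query string and fragment.
    """
    return urljoin(page_url, "/robots.txt")


# Every page on the same host maps to the same root-level file.
print(robots_url_for("https://yourwebsite.com/blog/my-post/?ref=home"))
# https://yourwebsite.com/robots.txt
```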

If this file is inaccessible:

- Search engines may not index your site properly.

- Important pages might be ignored or blocked from search results.

- SEO performance can be negatively affected.

Common causes:

- The robots.txt file is missing from the site’s root folder.

- Incorrect file permissions are preventing access.

- Server or security rules are blocking search engine crawlers.

Why This Matters

- Search engine visibility: If robots.txt is blocked, Google and other search engines may not index your pages correctly.

- Crawl efficiency: Search engines may waste resources crawling pages you don’t want them to or skip important pages entirely.

- SEO health: Without proper crawling, your site’s search ranking potential is reduced.

How to Fix

SureRank handles the robots.txt file automatically once the plugin is activated. If you can’t see it, follow the steps below.

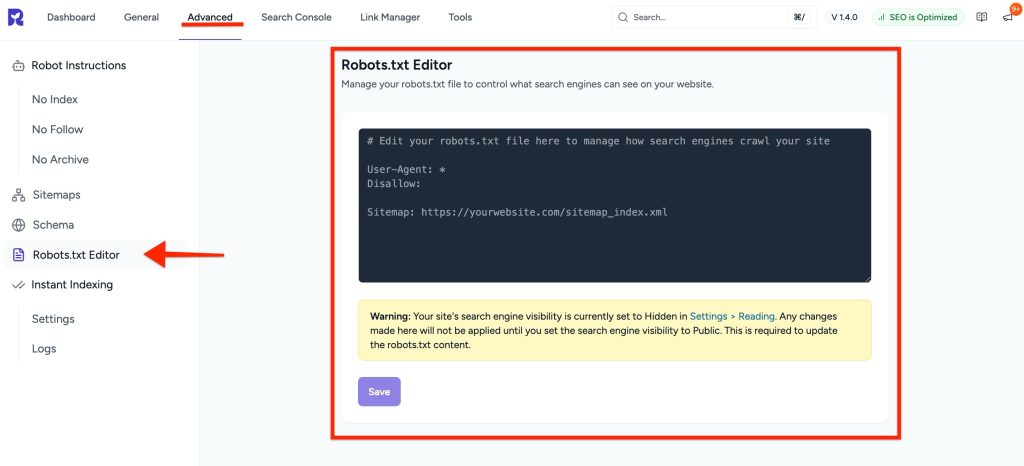

Step 1 – Access the Robots.txt Editor

- Go to your WordPress dashboard.

- Navigate to SureRank → Advanced Settings → Robots.txt Editor.

Step 2 – Edit Robots.txt

- If the file doesn’t exist, SureRank will allow you to create a default robots.txt file.

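- You can add rules that allow search engines to crawl your site. For example:

```
# Apply these rules to all crawlers
User-agent: *
# Keep crawlers out of the WordPress admin area
Disallow: /wp-admin/
# Still allow the AJAX endpoint that themes and plugins call from the front end
Allow: /wp-admin/admin-ajax.php

# Replace with your own sitemap URL
Sitemap: https://yourwebsite.com/sitemap_index.xml
```

Step 3 – Save Changes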

- Click Save Changes to apply your edits.

- If you use a caching plugin, clear your site cache to ensure the updated robots.txt is served.
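Before relying on new rules, you can sanity-check how a crawler would read them. The sketch below feeds the Step 2 example rules into Python’s standard `urllib.robotparser` module; the URLs use this guide’s placeholder domain. One caveat: this parser applies the first matching rule in file order, whereas Google applies the most specific (longest) matching rule, so the two can disagree on overlapping rules such as the `admin-ajax.php` exception. The sketch therefore only checks paths where they agree.

```python
from urllib.robotparser import RobotFileParser

# The Step 2 example rules. Python's parser treats a blank line as the end of
# a rule group, so User-agent and its rules are kept together.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://yourwebsite.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

for url in (
    "https://yourwebsite.com/",
    "https://yourwebsite.com/sample-page/",
    "https://yourwebsite.com/wp-admin/",
):
    verdict = "allowed" if parser.can_fetch("*", url) else "blocked"
    print(f"{verdict:8} {url}")

# site_maps() (Python 3.8+) returns the Sitemap: URLs the parser found.
print(parser.site_maps())
```

Run as-is, it should report the first two URLs as allowed, `/wp-admin/` as blocked, and list the sitemap URL.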

Step 4 – Check File Permissions

- Ensure the robots.txt file is readable by the web server.

- Recommended permissions: 644 (the owner can read and write; everyone else can only read).
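If your host gives you shell access and a physical robots.txt file exists in your site root, the 644 mode can be applied and verified with a short script. This is a sketch using Python’s standard `os` and `stat` modules; `ensure_world_readable` is a hypothetical helper name, the path in the comment is a placeholder for your own document root, and on shared hosting you may need to use your host’s file manager instead.

```python
import os
import stat


def ensure_world_readable(path: str) -> str:
    """Set a file to mode 644 (rw-r--r--) and return the resulting mode in octal.

    644 = owner read+write (6), group read (4), others read (4): the web server
    can read the file, but only the owner can modify it.
    """
    os.chmod(path, 0o644)
    mode = stat.S_IMODE(os.stat(path).st_mode)
    # Guard against filesystems that silently ignore chmod.
    if not mode & stat.S_IROTH:
        raise PermissionError(f"{path} is still not readable by others")
    return oct(mode)


# Placeholder path: point this at the robots.txt in your own document root.
# print(ensure_world_readable("/var/www/html/robots.txt"))
```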

Step 5 – Test Robots.txt Accessibility

- Open your browser and visit https://yourwebsite.com/robots.txt, replacing yourwebsite.com with your own domain.

- Make sure the file loads and does not return a 403 (Forbidden) or 404 (Not Found) error.

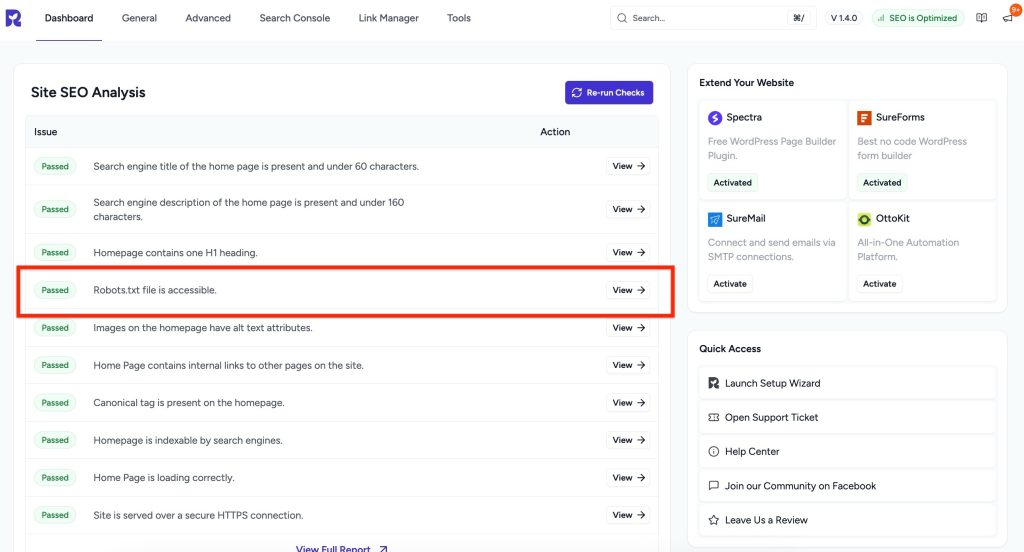
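To repeat the Step 5 check from a script, for example after each site update, you can request the file and inspect the HTTP status code. This is a minimal sketch using Python’s standard library; `robots_status`, `describe`, the hint texts, and the User-Agent string are hypothetical, and the hints simply map 403 and 404 back to the common causes listed earlier in this guide.

```python
import urllib.error
import urllib.request

# Rough mapping from status code to the common causes described in this guide.
HINTS = {
    200: "OK: robots.txt is accessible",
    403: "Forbidden: check file permissions and server/security rules",
    404: "Not found: the file is missing from the site root",
}


def robots_status(site_url: str, timeout: float = 10.0) -> int:
    """Fetch <site_url>/robots.txt and return the final HTTP status code.

    Redirects (e.g. HTTP to HTTPS) are followed automatically by urlopen.
    Network failures such as DNS errors are not HTTP statuses, so they
    propagate as urllib.error.URLError.
    """
    url = site_url.rstrip("/") + "/robots.txt"
    request = urllib.request.Request(url, headers={"User-Agent": "robots-check/1.0"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; the code is what we need.
        return err.code


def describe(status: int) -> str:
    """Turn a status code into a short, human-readable hint."""
    return HINTS.get(status, f"Unexpected HTTP {status}: check your server configuration")
```

For example, `print(describe(robots_status("https://yourwebsite.com")))` should print the OK hint once the file is reachable.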

Step 6 – Re-run Site SEO Analysis

- After making your changes, click Re-run Checks in SureRank.

- The error should no longer appear, and the check should confirm that the robots.txt file is accessible.
